When Bits Split Atoms

The 1962 Cuban Missile Crisis is the closest humanity has ever come to a nuclear exchange.[1] We know that nuclear weapons were nearly used both by a Soviet submarine carrying a nuclear torpedo and by American fighters sent to defend an off-course U-2, fighters armed with nothing but nuclear air-to-air missiles (did they have to put nukes in everything?).

The U-2 incident even convinced then-Secretary of Defense Robert McNamara that it was all over; upon hearing of it, he reportedly "turned absolutely white, and yelled hysterically, 'This means [nuclear] war with the Soviet Union!'"

The crisis illustrates not only how close we came, but also how many people could have started a nuclear war. There is a popular claim, reinforced by the theatrics of the “nuclear football”, that only the highest levels of government could possibly order a nuclear strike. From what we know historically, that has been a complete and total lie.

MAD: Hair-Trigger Weapons Are a Feature, Not a Bug

The principle of Mutually Assured Destruction[2] requires that a nuclear first strike be met with annihilating force. Therefore, even if communication with command and control were severed, subordinates would still need to be able to retaliate. During the Cold War, both sides accomplished this with “predelegation” of launch authority, which ended up allowing hundreds, if not thousands, of people to launch nuclear weapons without higher orders.[3]

You might object that this was way back in 1962. Is predelegation any better now? As one DoD source put it: “It’s still sensitive.” Consider that we know about predelegation in 1962 only because it was long ago, which has left time for leaks and declassifications. At that time there were only three nuclear weapons states. Now there are three times as many, not even counting nuclear sharing arrangements.

The takeaway should be just how startlingly easy it is to push one of the many nuclear buttons. That is where AI comes in.

AI’s Low-Hanging Fruit

Folks like Stephen Hawking and Elon Musk have highlighted the risk posed by a superintelligent AI, but what would the destructive mechanism be? A popular example is goal misalignment: an AI instructed to “make as many paperclips as possible” uncritically turns the world into paperclips.

This is certainly a future possibility, and algorithms can break our markets if not our bones. For the near future, however, there is only one system capable of immediate worldwide destruction, and it’s been set on a hair-trigger: nuclear weapons.

This is especially relevant because an AI catastrophe is more likely to come early than late. Early on, humans will have had less experience with AI and less time to properly align it. At that point, the lowest-hanging fruit for an AI to kill everyone would be to push one of the many “Kill Everyone” buttons already conveniently available, rather than trying to invent paperclip nanofactories and the like.[4]

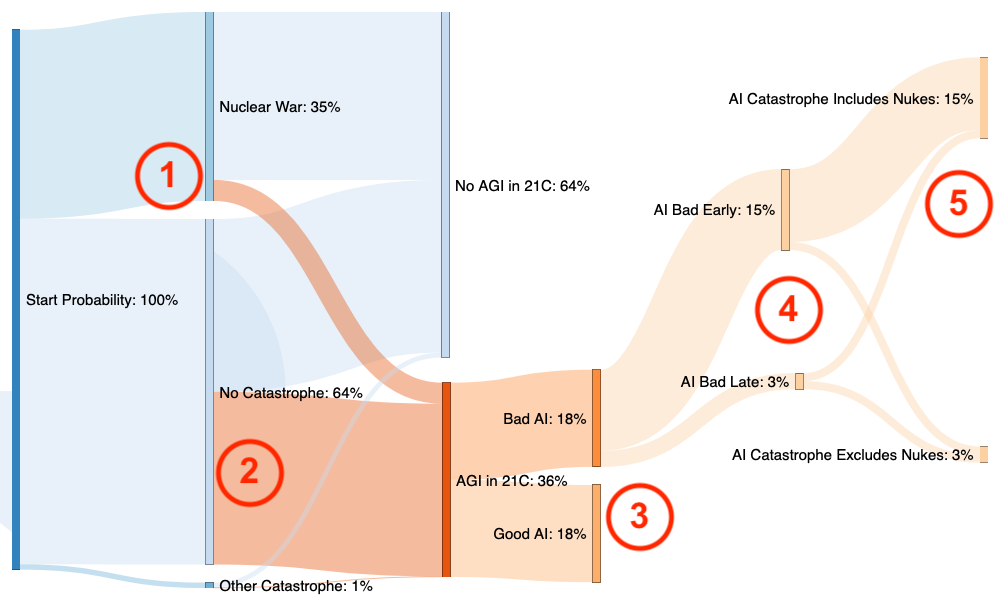

My Sankey diagram shows how I rate the probability of nuclear and AI outcomes. The exact numbers aren’t important;[5] you can even try adjusting them yourself (see the appendix).

My reasoning:

- If there’s a pre-AI nuclear war, there’s a much lower chance of having AI in the 21st century. AI existing at all is conditional on civilization not ending beforehand.[6]

- If there’s no 21st-century catastrophe, I estimate a 50/50 chance of inventing an Artificial General Intelligence (AGI).

- If there is an AGI, I estimate a 50/50 chance of it being bad.

- If it’s bad, it’s most likely to be catastrophic early (because humans will be inexperienced with AI, plus the Lindy effect).

- If it’s catastrophic early, it’s most likely to use nukes, i.e., the only sufficiently powerful system (or at least the lowest-hanging fruit).

Thus most of the AI catastrophe risk involves nukes.
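The branching estimates above can be made concrete with a small calculation. What follows is a minimal Python sketch, not the actual model behind the diagram. It multiplies conditional probabilities along each branch of the tree. The two 50/50 figures come from the list. Three further choices are assumptions: reading the footnoted 35% as the chance of a pre-AGI nuclear war, treating that branch as producing no AGI at all, and using placeholder values for "most likely" (0.8) and for the chance that a later bad AGI still uses nukes (0.2). None of these three placeholder numbers comes from the diagram.

```python
from dataclasses import dataclass


@dataclass
class Assumptions:
    # Pre-AGI nuclear war this century. ASSUMPTION: borrows the 35% footnote figure.
    p_nuclear_war_pre_agi: float = 0.35
    # AGI this century given no earlier catastrophe (the 50/50 estimate in the text).
    p_agi_given_no_catastrophe: float = 0.5
    # AGI turns out bad (the 50/50 estimate in the text).
    p_bad_given_agi: float = 0.5
    # "Most likely" catastrophic early. ASSUMPTION: hypothetical placeholder.
    p_early_given_bad: float = 0.8
    # "Most likely" uses nukes if early. ASSUMPTION: hypothetical placeholder.
    p_nukes_given_early: float = 0.8
    # A later bad AGI still reaches for nukes. ASSUMPTION: hypothetical placeholder.
    p_nukes_given_late: float = 0.2


def outcomes(a: Assumptions) -> dict:
    """Multiply the conditional probabilities along each branch of the tree."""
    # Simplification: treat the nuclear-war branch as producing no AGI at all.
    p_no_war = 1.0 - a.p_nuclear_war_pre_agi
    p_agi = p_no_war * a.p_agi_given_no_catastrophe
    p_bad = p_agi * a.p_bad_given_agi
    p_bad_early = p_bad * a.p_early_given_bad
    p_bad_late = p_bad - p_bad_early
    p_bad_via_nukes = (p_bad_early * a.p_nukes_given_early
                       + p_bad_late * a.p_nukes_given_late)
    return {
        "nuclear_war_pre_agi": a.p_nuclear_war_pre_agi,
        "agi": p_agi,
        "bad_agi": p_bad,
        "bad_agi_via_nukes": p_bad_via_nukes,
        # Fraction of AI-catastrophe probability that runs through nuclear weapons.
        "nuke_share_of_ai_risk": p_bad_via_nukes / p_bad if p_bad > 0 else 0.0,
    }


if __name__ == "__main__":
    for name, p in outcomes(Assumptions()).items():
        print(f"{name}: {p:.3f}")
```

With these placeholders, about 68% of the bad-AGI probability runs through nuclear weapons. The nuclear share depends only on the last three fields, because the earlier factors cancel out of the ratio. The nuclear-war and AGI estimates therefore change how likely a bad AGI is, but not how it would most likely strike.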

Nuclear Security: Highly Rated, Yet Underrated

Most of us know about the risk of nuclear war, but we leave its management to governments, treaties, and Big Organizations. It seems more appropriate to protest climate change or COVID policies than to comment on the fact that nearly everyone reading this has a nuclear weapon pointed at them right now.

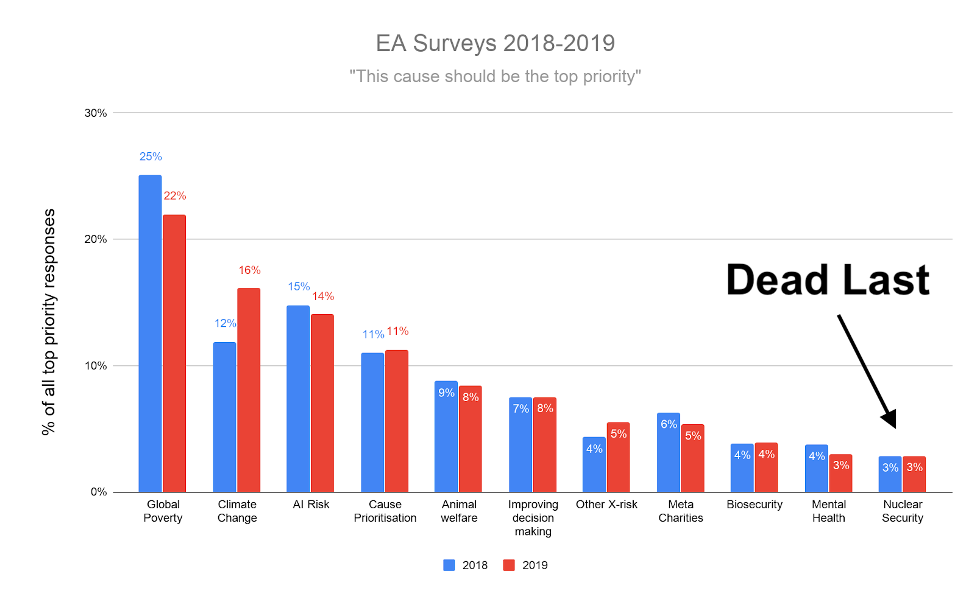

For example, in this survey of Effective Altruists, a group with a significant number of “Longtermists”, nuclear security is the lowest-rated priority.

Why is this? From 80,000 Hours: “Nuclear security is already a major topic of interest for governments, making it harder to have an effect on the situation”.

It’s true that, at a high level, smart and important people are working on this, but what about the general public? Do you know which political parties have better or worse nuclear security policies? Which politicians? Which countries? What do “better” and “worse” even mean here?

Now repeat these questions with “climate change” or “abortion” and it will become clear that nuclear security is hardly in the public consciousness at all.

Solution: Baby-Proof the Nukes

What to do about this? How to address this still-underrated problem? I suggest treating the coming of AI like we would the coming of a new baby.

Clearly, you’d prioritize Baby Safety. You’ll want to teach it how to stay safe: don’t take candy from strangers, look both ways before crossing the street, no unhealthy obsession with paperclips. That said, there’s only so much you can do before the baby arrives. One concrete thing you can do in advance, though, is baby-proof your home: you soften sharp edges, install baby gates, and make sure dangerous things aren’t just lying around.

Similarly, we must AI-proof our home planet by not leaving a nuclear Sword of Damocles hanging around.[7]

What does AI-proofing the nukes look like? Two measurable, AI-relevant steps:[8]

- Reduce the total number of warheads to below the threshold needed to cause a catastrophic nuclear winter. Split this quota across nations so that every nuclear state still has some of our beloved A-bombs, but if an AI detonates them all, the result at least falls short of Armageddon.[9]

- No-first-use pledges. This may sound aspirational, but in fact China and India currently have no-first-use policies, and the Soviet Union adopted one in 1982 that Russia abandoned in 1993. Bilateral pledges could reduce the risk between great powers while preserving most of the strategic advantages of being a nuclear state. A no-first-use pledge means that faulty intelligence, misinformation, or impersonation could not initiate a nuclear launch in the absence of a detonation (or possibly a launch warning). Even if a bomb goes off, there will be slightly more cause to investigate before retaliating.

I know the nuke-AI angle may seem like an unoriginal take; we did all see Terminator. But risk profiles are usually Pareto-distributed, with a small proportion of the factors accounting for a large proportion of the risk. Clichéd as it may be, nuclear war has historically been our biggest existential risk, and as long as thousands of weapons are pointed at one another, it is likely to remain so.

Making an AGI might change the triggerman, but it’s still nuclear holocaust at the end of the trigger.

[1] I say “exchange” rather than “war” because humanity has already had a (one-sided) nuclear war. John Hersey’s Hiroshima contains one of the best descriptions of it.

[2] MAD was named ironically to illustrate the insanity of the doctrine and has been used unironically ever since.

[3] The two-man rule won’t save you either. MAD incentivizes you to always retaliate, even at the individual level. Daniel Ellsberg (of Pentagon Papers fame) witnessed that even with the two-man rule, responsible pairs generally had an unofficial system so one man could get both authorization keys if needed.

[4] Even if the AI just wants paperclips, there might be more steel available for paperclips if everybody were vaporized.

[5] A 35% chance of nuclear war is about right for the 20th century but will hopefully turn out to be too high for the 21st.

[6] Conversely, nuclear risk would likely be increased by instability caused by other factors like AI, climate change, bioweapons, etc.

[7] This same line of reasoning applies to other dangerous-for-AI-to-have technologies like autonomous weapons systems.

[8] I won’t suggest air-gapping the weapons as a serious mitigation. They’ll still be connected to humans, which won’t be much of an obstacle to a truly intelligent AI. If anything, humans will likely be the weakest link.

[9] There is some debate on how likely or bad nuclear winter would be. Regardless, let’s agree that 2,000 nukes going off is better than the current ~10,000 nukes going off, and that either would still be the worst thing ever to happen.

Thanks to Uri, Kaamya, and Applied Divinity Studies for feedback on drafts of this, and The Doomsday Machine by Daniel Ellsberg for inspiration.